State of the art in AI and Machine Learning – highlights of papers with code

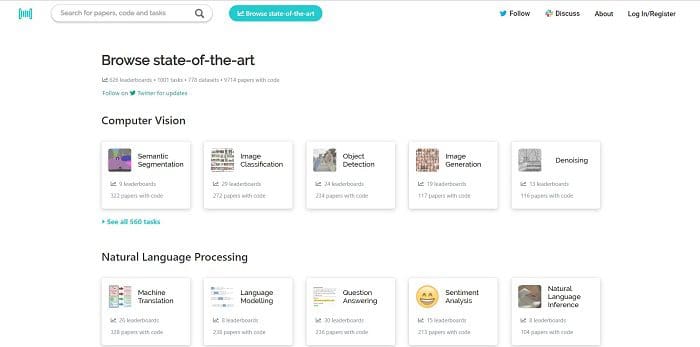

We introduce Papers With Code, a free and open resource of state-of-the-art Machine Learning papers, code and evaluation tables.

As any Machine Learning, AI or Computer Science enthusiast will know, finding resources and papers on subjects you’re interested in can be a hassle. Often you’re required to sign up to a website, and some will even try to charge you a subscription fee for reading the work of others.

The mission of Papers With Code is to create a free and open resource with Machine Learning papers, code and evaluation tables.

We believe this is best done together with the community and powered by automation.

We've already automated the linking of code to papers, and we are now working on automating the extraction of evaluation metrics from papers.

On top of this, Papers With Code has its own Slack channel and lets users download all of the data that helps run the site. The project is open to contributions, so feel free to dive in and keep the community growing!

In celebration of this great resource, we’ve attempted to summarise five of our favourite topics (what the website calls “tasks”) on the site and some of the papers they offer:

Semantic Segmentation

Semantic segmentation means recognizing and understanding what is in an image at the pixel level. This is one of the biggest categories in terms of content on the site, with 322 papers with code. The most popular of these is entitled Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. The paper aims to combine the advantages of two approaches to semantic segmentation: the spatial pyramid pooling module and the encoder-decoder structure. It is accompanied by a publicly available reference implementation of the proposed models in TensorFlow.
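
To make "at the pixel level" concrete, here is a minimal sketch of the final labelling step, assuming a model has already produced one score map per class. The `segment` function and the toy scores are hypothetical illustrations and are not taken from the paper or its TensorFlow code.

```python
def segment(scores):
    """Assign each pixel the class with the highest score.

    scores[c][y][x] is the (hypothetical) score of class c at pixel (y, x).
    Returns a label map with the same height and width as the image.
    """
    num_classes = len(scores)
    height, width = len(scores[0]), len(scores[0][0])
    return [
        [max(range(num_classes), key=lambda c: scores[c][y][x]) for x in range(width)]
        for y in range(height)
    ]


# Toy 2x3 "image" with two classes: 0 = background, 1 = object.
scores = [
    [[0.9, 0.2, 0.1], [0.8, 0.7, 0.3]],  # class 0 scores
    [[0.1, 0.8, 0.9], [0.2, 0.3, 0.7]],  # class 1 scores
]
label_map = segment(scores)
print(label_map)  # [[0, 1, 1], [0, 0, 1]]
```

The output is a label for every pixel rather than a single label for the whole image, which is what separates segmentation from image classification.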

Other top papers on Semantic Segmentation:

MobileNetV2: Inverted Residuals and Linear Bottlenecks

Rethinking Atrous Convolution for Semantic Image Segmentation

NLP

Natural Language Processing is one of the biggest collections of tasks on the site, with subsections on Machine Translation, Language Modelling, Sentiment Analysis, Text Classification and many others. One of the most popular categories within this is Question Answering, with over 200 papers on the subject. The top-ranked paper on this subject is by Jacob Devlin, Ming-Wei Chang, Kenton Lee and Kristina Toutanova and is titled BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. The paper introduces a new language representation model called BERT, which stands for Bidirectional Encoder Representations from Transformers. Unlike other recent language representation models, BERT is designed to pre-train deep bidirectional representations by jointly conditioning on both left and right context in all layers.

Other top papers on NLP:

Exploring the Limits of Language Modeling

Semi-Supervised Sequence Modeling with Cross-View Training

Can Active Memory Replace Attention?

BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding

Transfer Learning

Transfer learning is a methodology where weights from a model trained on one task are taken and used either:

- To construct a fixed feature extractor

- As weight initialization and/or fine-tuning
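
The two options above can be sketched with a toy two-layer model in plain Python. This is an illustrative sketch only: the "pretrained" weights, the data and the function names (`features`, `train`, `mse`) are all assumptions made up for the example, and a real project would use a deep learning framework instead.

```python
def features(x, w_feat):
    """One hidden ReLU layer, standing in for a pretrained network."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in w_feat]


def predict(x, w_feat, w_head):
    return sum(h * f for h, f in zip(w_head, features(x, w_feat)))


def mse(data, w_feat, w_head):
    errors = [predict(x, w_feat, w_head) - y for x, y in data]
    return sum(e * e for e in errors) / len(errors)


def train(data, pretrained, freeze_features, lr=0.02, epochs=500):
    """Plain SGD on squared error; returns (w_feat, w_head)."""
    w_feat = [row[:] for row in pretrained]  # start from the pretrained weights
    w_head = [0.0] * len(w_feat)             # new task-specific output layer
    for _ in range(epochs):
        for x, y in data:
            f = features(x, w_feat)
            err = sum(h * fj for h, fj in zip(w_head, f)) - y
            old_head = w_head[:]
            # The new head is trained in both modes.
            w_head = [h - lr * err * fj for h, fj in zip(w_head, f)]
            if not freeze_features:
                # Fine-tuning: also update the pretrained layer.
                for j, row in enumerate(w_feat):
                    if f[j] > 0.0:  # ReLU passes gradient only when active
                        w_feat[j] = [w - lr * err * old_head[j] * xi
                                     for w, xi in zip(row, x)]
    return w_feat, w_head


pretrained = [[1.0, 0.0], [0.0, 1.0]]  # pretend these came from another task
data = [([1.0, 0.0], 2.0), ([0.0, 1.0], 3.0), ([1.0, 1.0], 5.0)]

frozen_feat, frozen_head = train(data, pretrained, freeze_features=True)
tuned_feat, tuned_head = train(data, pretrained, freeze_features=False)

print(frozen_feat == pretrained)  # True: the extractor was left untouched
print(tuned_feat == pretrained)   # False: fine-tuning moved the weights
```

With `freeze_features=True` only the new head learns, which is the fixed feature extractor case. With `freeze_features=False` the pretrained weights serve as the initialization and are then fine-tuned.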

The most popular paper on transfer learning is Semi-supervised Knowledge Transfer for Deep Learning from Private Training Data. This paper addresses a problem that affects models trained on private data: a model may inadvertently and implicitly store some of its training data, so careful analysis of the model may reveal sensitive information. To address this problem, the paper demonstrates a generally applicable approach to protecting sensitive training data: Private Aggregation of Teacher Ensembles (PATE). The approach combines, in a black-box fashion, multiple models trained on disjoint datasets, such as records from different subsets of users. The paper also includes a link to the GitHub repo with all the TensorFlow code for this project.
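
The aggregation idea can be sketched as a noisy majority vote over the teachers' predictions. The Laplace noise is our understanding of how PATE aggregates votes and is not stated in the text above, and the names `laplace`, `noisy_aggregate` and `noise_scale` are hypothetical. Treat this as a rough illustration, not the authors' implementation.

```python
import random


def laplace(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    if scale == 0:
        return 0.0
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def noisy_aggregate(teacher_votes, num_classes, noise_scale, rng=random):
    """Return the class with the highest noisy vote count.

    teacher_votes holds one predicted class index per teacher model,
    each teacher having been trained on a disjoint slice of the data.
    """
    counts = [0.0] * num_classes
    for vote in teacher_votes:
        counts[vote] += 1.0
    noisy = [c + laplace(noise_scale, rng) for c in counts]
    return max(range(num_classes), key=lambda k: noisy[k])


votes = [1, 1, 1, 0, 2]  # five teachers label one example
print(noisy_aggregate(votes, num_classes=3, noise_scale=0.0))  # 1
print(noisy_aggregate(votes, num_classes=3, noise_scale=1.0,
                      rng=random.Random(0)))  # may differ from the majority
```

Because only the aggregated label is released, no single teacher's training records determine the answer on their own, and a larger `noise_scale` trades label accuracy for more privacy.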

Other top papers on Transfer Learning:

Large-scale Simple Question Answering with Memory Networks

DeCAF: A Deep Convolutional Activation Feature for Generic Visual Recognition

Tencent ML-Images: A Large-Scale Multi-Label Image Database for Visual Representation Learning

Bag of Tricks for Image Classification with Convolutional Neural Networks

Multi-task learning

Multi-task learning aims to learn multiple different tasks simultaneously while maximizing performance on one or all of the tasks. The paper with the most stars currently is DRAGNN: A Transition-based Framework for Dynamically Connected Neural Networks. This work presents a compact, modular framework for constructing novel recurrent neural architectures. Like the papers above, it features a full working code example in TensorFlow.

Other top papers on Multi-task learning:

Semi-Supervised Sequence Modeling with Cross-View Training

Learning General Purpose Distributed Sentence Representations via Large Scale Multi-task Learning

A Hierarchical Multi-task Approach for Learning Embeddings from Semantic Tasks

Recommendation Systems

The aim of a recommendation system is to produce a list of recommendations for the user. A popular paper in this category is Training Deep AutoEncoders for Collaborative Filtering by Oleksii Kuchaiev and Boris Ginsburg. This paper suggests a novel model for the rating prediction task in recommender systems which significantly outperforms previous state-of-the-art models on a time-split Netflix data set. The model is based on a deep autoencoder with 6 layers and is trained end-to-end without any layer-wise pre-training. This is an NVIDIA research project in PyTorch, with all the code available for public use.
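
One practical detail of rating prediction is that most users rate only a few items, so the training error is usually computed over the observed ratings only. The sketch below shows such a masked loss. It assumes that a rating of 0 means "unrated", and the `masked_mse` helper is hypothetical; this is not code from the NVIDIA project.

```python
def masked_mse(predicted, observed):
    """Mean squared error over rated items only (a rating of 0 means unrated)."""
    pairs = [(p, r) for p, r in zip(predicted, observed) if r != 0]
    if not pairs:
        return 0.0
    return sum((p - r) ** 2 for p, r in pairs) / len(pairs)


ratings = [5.0, 0.0, 3.0, 0.0]         # the user rated items 0 and 2
reconstruction = [4.0, 2.5, 3.0, 1.0]  # autoencoder output for all items
print(masked_mse(reconstruction, ratings))  # 0.5
```

The reconstructed values for unrated items (2.5 and 1.0 here) do not contribute to the loss; they are the model's predictions and can be used to rank recommendations.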

Other top papers on Recommendation Systems:

fastFM: A Library for Factorization Machines

AutoInt: Automatic Feature Interaction Learning via Self-Attentive Neural Networks

Product-based Neural Networks for User Response Prediction over Multi-field Categorical Data

DeepFM: An End-to-End Wide & Deep Learning Framework for CTR Prediction

Of course, we have only scratched the surface of what Papers With Code has to offer. We hope you love the site as much as we do!
