The Essence of Machine Learning

I thought I would end the year with a not-so-serious post about capturing the essence of machine learning. You have undoubtedly read a variety of in-depth and semi-in-depth treatments of what machine learning is and how it relates to numerous other topics. Starting from a common point of reference when discussing such complex concepts is always a good idea; the problem is that there are innumerable such points of reference for a topic like machine learning.

So I thought, why not examine some of these points of reference?

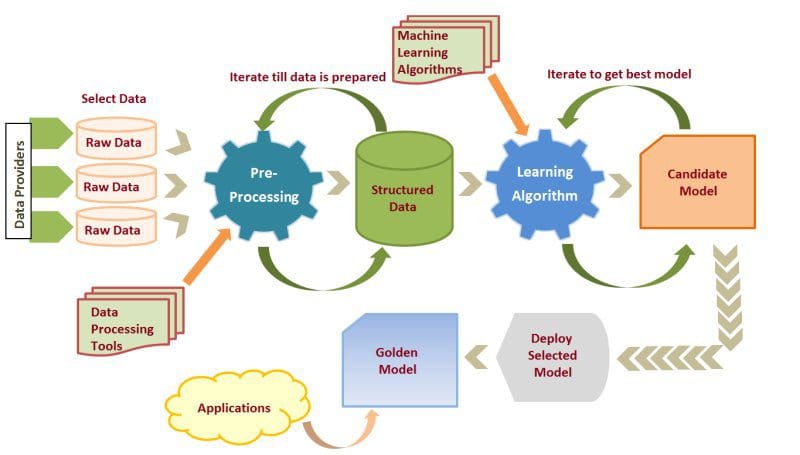

Source: Imarticus

And now, without further ado, as an exercise in what may seem to be semantics, let's explore some 30,000-foot definitions of what machine learning is.

Tom Mitchell

The first definition, my personal favorite, comes from renowned computer scientist, machine learning researcher, and Carnegie Mellon professor Tom Mitchell.

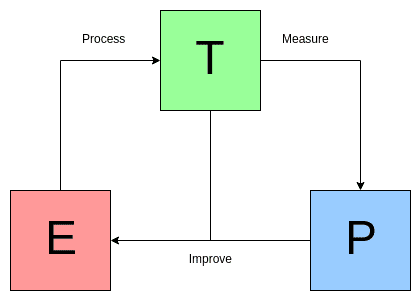

A computer program is said to learn from experience E with respect to some class of tasks T and performance measure P, if its performance at tasks in T, as measured by P, improves with experience E. [1]

Mitchell's quote is well known and time-tested in the world of machine learning, having first appeared in his 1997 book. The sentence has influenced me personally: I have referred to it numerous times over the years and referenced it in my Master's thesis. The quote also features prominently in Chapter 5 of the much more recent and authoritative "Deep Learning" by Goodfellow, Bengio & Courville, serving as the jumping-off point for the book's explanation of learning algorithms. See Figure 1 below for an interpretation of the so-called Mitchell Paradigm.

Figure 1. The Mitchell Paradigm, visualized (source)
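To make Mitchell's E, T and P a little more concrete, here is a minimal sketch of my own (not taken from his book). It assumes a toy task T of separating two overlapping groups of numbers, a performance measure P of accuracy on a fixed test set, and experience E counted as the number of labeled examples the learner has seen. The function names and the midpoint-threshold learner are illustrative choices, nothing more.

```python
import random

def make_examples(n, rng):
    """Draw n labeled points: class 0 is centred at -2, class 1 at +2."""
    examples = []
    for i in range(n):
        label = i % 2  # alternate labels so the classes stay balanced
        x = rng.gauss(2.0 if label else -2.0, 1.0)
        examples.append((x, label))
    return examples

def learn_threshold(experience):
    """Use experience E to place a threshold midway between the class means."""
    xs0 = [x for x, y in experience if y == 0]
    xs1 = [x for x, y in experience if y == 1]
    if not xs0 or not xs1:
        return None  # not enough experience to learn anything yet
    return (sum(xs0) / len(xs0) + sum(xs1) / len(xs1)) / 2

def predict(threshold, x):
    """Task T: assign a class to x. Without a threshold, always guess class 0."""
    if threshold is None:
        return 0
    return 1 if x > threshold else 0

def performance(threshold, test_set):
    """Performance measure P: fraction of test points classified correctly."""
    correct = sum(predict(threshold, x) == y for x, y in test_set)
    return correct / len(test_set)

rng = random.Random(0)
test_set = make_examples(1000, rng)      # held fixed so P is comparable
training_pool = make_examples(200, rng)  # the source of experience E

results = {}
for e in (0, 2, 20, 200):
    results[e] = performance(learn_threshold(training_pool[:e]), test_set)

for e, p in results.items():
    print(f"E = {e:3d} examples -> P = {p:.3f}")
```

With no experience the program can only guess, so P sits at chance; once it has seen examples, P rises well above that. That is all "learning" means under this definition.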

Ian Goodfellow, Yoshua Bengio & Aaron Courville

Speaking of Goodfellow, Bengio & Courville, and "Deep Learning," here is how machine learning has been defined in the pages of that book.

Machine learning is essentially a form of applied statistics with increased emphasis on the use of computers to statistically estimate complicated functions and a decreased emphasis on proving confidence intervals around these functions[.] [2]

Mitchell's definition of machine learning was removed from application; it focused on the specific components of the optimization process normally associated with machine learning, yet it did not prescribe how that process should be approached in practice. The definition in "Deep Learning," shown above, is far more prescriptive in nature, noting that computing power is leveraged (its use is, in fact, emphasized) while the traditional statistical concept of confidence intervals is de-emphasized.
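As a rough illustration of that emphasis, the sketch below lets the computer estimate a curved function from noisy samples and then judges the estimate by its error on held-out data, with no confidence intervals in sight. The sine target, the noise level, and the k-nearest-neighbors estimator are all my own assumptions for the sake of the example; the book does not prescribe any of them.

```python
import math
import random

rng = random.Random(1)

def sample(n):
    """Noisy observations of a function we pretend not to know (here, sin)."""
    points = []
    for _ in range(n):
        x = rng.uniform(0.0, 2 * math.pi)
        points.append((x, math.sin(x) + rng.gauss(0.0, 0.1)))
    return points

train = sample(200)
held_out = sample(100)

def knn_estimate(x, data, k=5):
    """Estimate f(x) as the average target of the k nearest training points."""
    nearest = sorted(data, key=lambda p: abs(p[0] - x))[:k]
    return sum(y for _, y in nearest) / k

def mse(predict, data):
    """Mean squared error of a predictor on a data set."""
    return sum((predict(x) - y) ** 2 for x, y in data) / len(data)

# Baseline: ignore x entirely and always predict the training mean.
mean_y = sum(y for _, y in train) / len(train)
baseline_mse = mse(lambda x: mean_y, held_out)

# Flexible estimator: brute-force computation instead of a closed-form model.
knn_mse = mse(lambda x: knn_estimate(x, train), held_out)

print(f"held-out MSE, constant baseline: {baseline_mse:.3f}")
print(f"held-out MSE, 5-nearest-neighbors: {knn_mse:.3f}")
```

The estimator says nothing about how certain it is at any given point; its only justification is that it predicts unseen data better than the baseline does.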

Ian Witten, Eibe Frank & Mark Hall

Another machine learning source of particular note for me is the book "Data Mining: Practical Machine Learning Tools and Techniques," by Witten, Frank & Hall, which was the first book on the subject I read comprehensively. Light on math but packed with intuition and explanations with a practical bent, "Data Mining" was long my go-to (probably biased) suggestion for newcomers to the field of machine learning.

Their initial pursuit of a definition of machine learning is a bit scattered, attempting to weave together the concepts of learning, performance, and knowledge in the contexts of both machine learning and data mining. The authors go off on a few tangents, but some select quotes from the ride are shown below.

[W]e are interested in improvements in performance, or at least the potential for performance, in new situations.

Things learn when they change their behavior in a way that makes them perform better in the future.

Learning implies thinking and purpose. Something that learns has to do so intentionally.

Experience shows that in many applications of machine learning to data mining, the explicit knowledge structures that are acquired, the structural descriptions, are at least as important as the ability to perform well on new examples. People frequently use data mining to gain knowledge, not just predictions. [3]

It is not necessarily notable that the term data mining is used as a complementary term to machine learning. The third edition of this text, from which these quotes were taken, was published in 2011, when the term data mining had much more traction than it does now; strip out the references to data mining and what is written above still holds true for machine learning itself.

Anyhow, while they prefaced their diatribe with a wish to stray from the philosophical, Witten, Frank & Hall actually did a pretty good job of getting somewhat philosophical. The excerpts are helpful, however, in that they provide a different angle on a machine learning definition: while Mitchell focuses on the specific components of the optimization process, and Goodfellow, Bengio & Courville lean toward a more prescriptive definition noting the relative importance of computing power, this attempt at a definition focuses on what aspects of "learning" are analogous and important in the machine learning process. The selections also make a point that is just as practical as it is philosophical: the final excerpt notes that both the acquired knowledge and the ability to use this knowledge are important aspects of machine learning (see both training and inference).

Christopher Bishop

Let's turn to one final text for its attempt at capturing a definition of machine learning, "Pattern Recognition and Machine Learning," by researcher Christopher M. Bishop. Of note, Bishop does not explicitly define the term early on, but does a pretty good job of implicitly providing an algorithm-centric definition of machine learning (note that it is discussed in reference to a digit classification task).

The result of running the machine learning algorithm can be expressed as a function y(x) which takes a new digit image x as input and that generates an output vector y, encoded in the same way as the target vectors. The precise form of the function y(x) is determined during the training phase, also known as the learning phase, on the basis of the training data. Once the model is trained it can then determine the identity of new digit images, which are said to comprise a test set. The ability to categorize correctly new examples that differ from those used for training is known as generalization. In practical applications, the variability of the input vectors will be such that the training data can comprise only a tiny fraction of all possible input vectors, and so generalization is a central goal in pattern recognition. [4]

First, read nothing more into the reference to "pattern recognition" than the fact that we are discussing supervised machine learning, as opposed to unsupervised learning or reinforcement learning (or some other form of machine learning). Second, and more importantly, this is the only definition which provides a step-by-step treatment of what machine learning entails, however short those steps might be in this case. Also of potential interest, the ensuing page and a half of Bishop's book outlines and incorporates a number of additional machine learning concepts, and ties them all together nicely, providing a readable introduction without getting bogged down in the math (much of the rest of the book takes care of this).
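Bishop's steps are short enough to sketch in a few lines of code. What follows is my own toy version, not anything from his book: the 5x3 pixel "digits," the pixel-flipping noise, and the nearest-centroid model are all assumptions made for illustration. The shape is the one he describes, though: a training phase fixes the form of y(x), y(x) returns an output vector encoded like the targets (one-hot here), and generalization is measured on test images that never appeared in training.

```python
import random

# Hypothetical 5x3 binary "digit" images, flattened row by row.
PROTOTYPES = {
    0: "111101101101111",
    1: "010110010010111",
    7: "111001001001001",
}
CLASSES = sorted(PROTOTYPES)

def one_hot(label):
    """Target vector: 1 in the position of the true class, 0 elsewhere."""
    return [1 if c == label else 0 for c in CLASSES]

def noisy_image(label, rng, flip=0.1):
    """Copy a prototype, flipping each pixel independently with probability flip."""
    return tuple(int(ch) ^ (rng.random() < flip) for ch in PROTOTYPES[label])

def draw(n_per_class, rng):
    """Generate (input vector x, target vector t) pairs for every class."""
    return [(noisy_image(c, rng), one_hot(c))
            for c in CLASSES for _ in range(n_per_class)]

def train(training_data):
    """Training phase: determine y(x) by averaging the images of each class."""
    centroids = []
    for k in range(len(CLASSES)):
        members = [x for x, t in training_data if t[k] == 1]
        centroids.append([sum(px) / len(members) for px in zip(*members)])

    def y(x):
        # Pick the closest class centroid and encode it like the targets.
        dists = [sum((a - b) ** 2 for a, b in zip(x, c)) for c in centroids]
        best = dists.index(min(dists))
        return [1 if k == best else 0 for k in range(len(CLASSES))]

    return y

rng = random.Random(42)
training_data = draw(20, rng)
y = train(training_data)

# Test set: keep only new images that differ from every training image.
seen = {x for x, _ in training_data}
test_set = [(x, t) for x, t in draw(100, rng) if x not in seen]

accuracy = sum(y(x) == t for x, t in test_set) / len(test_set)
print(f"{len(test_set)} unseen test images, accuracy = {accuracy:.3f}")
```

Even in this tiny setting there are 2^15 possible images and the model trains on 60 of them, which is Bishop's point about the training data covering only a sliver of the input space.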

So there we have four approaches to defining machine learning: one which defines it abstractly in relation to the optimization process; another which is more prescriptive and notes the importance of computation in machine learning; a third that focuses on what aspects of "learning" are analogous and important in the machine learning process; and finally one which outlines machine learning from an algorithmic standpoint. None are incorrect, yet all are incomplete. It isn't just semantics; exploring what pioneers and esteemed researchers believe to be "machine learning" will expand how we come to define it ourselves.

References:

1. Machine Learning, Tom Mitchell, McGraw Hill, 1997.

2. Deep Learning, Ian Goodfellow, Yoshua Bengio & Aaron Courville, MIT Press, 2016.

3. Data Mining: Practical Machine Learning Tools and Techniques (3rd ed.), Ian Witten, Eibe Frank & Mark Hall, Morgan Kaufmann, 2011.

4. Pattern Recognition and Machine Learning, Christopher M. Bishop, Springer, 2006.
