Text Mining 101: Mining Information From A Resume

We show a framework for mining relevant entities from a text resume, and how to separate parsing logic from entity specification.

By Yogesh H. Kulkarni

Summary

This article demonstrates a framework for mining relevant entities from a text resume. It shows how separation of parsing logic from entity specification can be achieved. Although only one resume sample is considered here, the framework can be enhanced further to be used not only for different resume formats, but also for documents such as judgments, contracts, patents, medical papers, etc.

Introduction

The majority of the world’s unstructured data is in textual form. To make sense of it, one must either go through it painstakingly or employ automated techniques to extract relevant information. Given the volume, variety and velocity of such textual data, it is imperative to employ Text Mining techniques that extract the relevant information and transform unstructured data into structured form, so that further insights, processing, analysis and visualizations are possible.

This article deals with a specific domain: applicant profiles, or resumes. They come not only in different file formats (txt, doc, pdf, etc.) but also with different contents and layouts. Such heterogeneity makes extraction of relevant information a challenging task. Even though it may not be possible to extract all the relevant information from every format, one can get started with simple steps and at least extract whatever is possible from some of the known formats.

Broadly, there are two approaches: linguistics-based and Machine Learning-based. In “linguistic” approaches, pattern searches are made to find key information, whereas in “machine learning” approaches, supervised or unsupervised methods are used to extract the information. “Regular expression” (RegEx), used here, is one of the “linguistic” pattern-matching methods.

Framework

A primitive way of implementing entity extraction from a resume would be to write the pattern-matching logic for each entity monolithically in the program code. Whenever a pattern changes, or new entities or patterns are introduced, the code itself has to change, which makes maintenance cumbersome as complexity grows. To alleviate this problem, the framework demonstrated below separates the parsing logic from the specification of entities. Entities and their RegEx patterns are specified in a configuration file, which also specifies the extraction method to be used for each type of entity. The parser uses these patterns to extract entities by the specified method. The advantage of such separation is not just maintainability but also its potential use in other domains such as legal/contracts, medical, etc.

Entities Specification

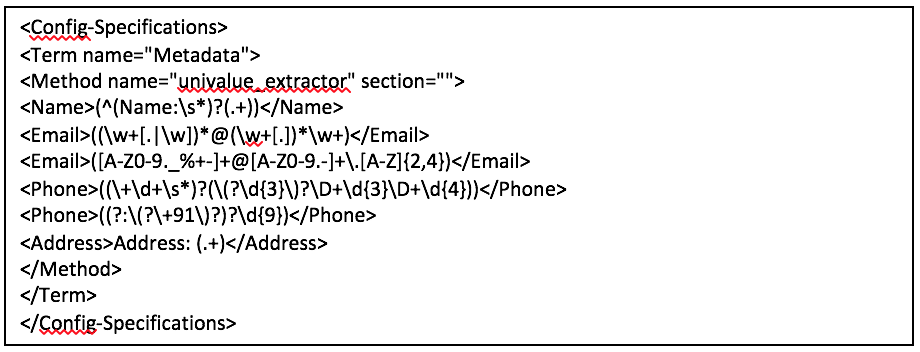

The configuration file specifies the entities to be extracted, along with their patterns and extraction method. It also specifies the section within which the given entities are to be looked for. The specification shown in the textbox below describes metadata entities like Name, Phone, Email, etc. The method used to extract them is “univalue_extractor”. The section within which these entities are searched is named “”; it is a non-labelled section, such as the initial few lines of the resume. Entities like Email or Phone can have multiple regular-expression patterns. If the first fails, the second one is tried, and so on. Here is a brief description of the patterns used:

- Name: The resume’s first line is assumed to contain the Name, with an optional “Name:” prefix.

- Email: A word (with an optional dot in the middle), then “@”, then a word, a dot and another word.

- Phone: An optional international code in brackets, then a 3-3-4 digit pattern, with optional brackets around the first 3 digits. For Indian numbers, the code can be hard-coded to “+91”, as shown in the next entry.

- Python’s ‘etree’ ElementTree library is used to parse the config XML into an internal dictionary.

- The parser reads this specification dictionary and uses it to find entities in the text resume.

- Once an entity is matched, it is stored under its node tag, such as Email, Phone, etc.
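The flow described in the list above can be sketched roughly as follows. This is a minimal illustration and not the article's actual implementation: the XML layout, the `load_spec` helper, the sample resumes and the exact regular expressions are all assumptions made for the example. Only the method name “univalue_extractor”, the entity names and the try-patterns-in-order behaviour come from the text. The sketch uses the standard library's `xml.etree.ElementTree`.

```python
import re
import xml.etree.ElementTree as ET

# Hypothetical config layout: tag and attribute names are illustrative
# assumptions, not the article's actual schema.
CONFIG_XML = r"""
<config>
  <metadata method="univalue_extractor" section="">
    <Name>
      <pattern>^(?:Name\s*:\s*)?(.+)</pattern>
    </Name>
    <Email>
      <pattern>(\w+(?:\.\w+)?@\w+\.\w+)</pattern>
    </Email>
    <Phone>
      <pattern>((?:\(\+\d{1,3}\)\s*)?\(?\d{3}\)?[\s-]\d{3}[\s-]\d{4})</pattern>
      <pattern>(\+91[\s-]?\d{10})</pattern>
    </Phone>
  </metadata>
</config>
"""


def load_spec(xml_text):
    """Parse the config XML into a plain dictionary (hypothetical helper)."""
    root = ET.fromstring(xml_text.strip())
    spec = {}
    for group in root:
        spec[group.tag] = {
            "method": group.get("method"),
            "section": group.get("section"),
            # entity name -> ordered list of regex patterns
            "entities": {
                entity.tag: [p.text for p in entity.findall("pattern")]
                for entity in group
            },
        }
    return spec


def univalue_extractor(text, entities):
    """Return one value per entity, trying each pattern in order."""
    found = {}
    for name, patterns in entities.items():
        for pattern in patterns:
            match = re.search(pattern, text)
            if match:
                # Store the value under the entity's node tag.
                found[name] = match.group(1).strip()
                break  # first matching pattern wins
    return found


# Made-up sample inputs for illustration only.
SAMPLE_RESUME = "Name: Jane Doe\njane.doe@example.com\n(+1) (555) 123-4567\n"
SAMPLE_RESUME_IN = "Ravi Kumar\nravi@example.in\n+91 9876543210\n"

spec = load_spec(CONFIG_XML)
metadata = univalue_extractor(SAMPLE_RESUME, spec["metadata"]["entities"])
metadata_in = univalue_extractor(SAMPLE_RESUME_IN, spec["metadata"]["entities"])
print(metadata)
print(metadata_in)
```

In this sketch the second sample has no 3-3-4 separated number, so the first Phone pattern fails and the “+91” pattern is tried next. Adding a new entity or pattern only requires editing the XML, not the parser functions.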

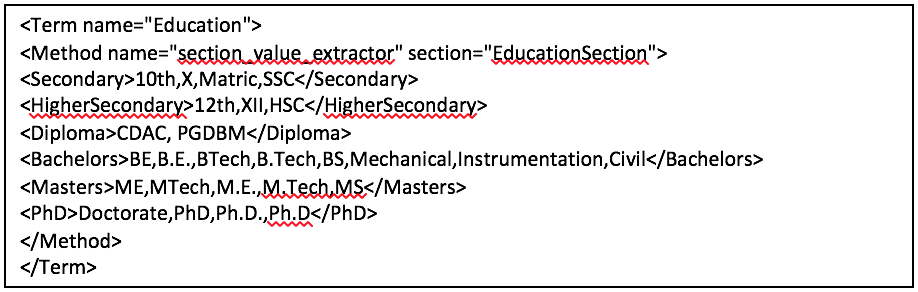

Like the metadata described above, educational qualifications can be searched with the config below:

- The parser’s “section_value_extractor” method is used, within the section “EducationSection”. It finds values within a section simply by matching the given words.

- If the parser finds any of the words, say “10th” or “X” or “SSC”, then those are the values extracted for the entity “Secondary”, describing Secondary School level education.

- If the parser finds any of the words, say “12th” or “XII” or “HSC”, then those are the values extracted for the entity “HigherSecondary”, describing Higher Secondary School level education.
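A word-matching extractor of this kind might look like the sketch below. The dictionary form of the specification, the sample section text and the whole-word matching rule are assumptions for illustration. The whole-word rule is added here so that “X” does not fire inside “XII”; the article does not say how its parser handles that case.

```python
import re

# Hypothetical in-memory form of the education spec; the entity names and
# words come from the article, the dictionary layout is an assumption.
EDUCATION_SPEC = {
    "Secondary": ["10th", "X", "SSC"],
    "HigherSecondary": ["12th", "XII", "HSC"],
}


def section_value_extractor(section_text, entities):
    """Collect, per entity, the listed words that occur in the section."""
    found = {}
    for entity, words in entities.items():
        hits = []
        for word in words:
            # Whole-word match: no word character directly before or after.
            pattern = r"(?<!\w)" + re.escape(word) + r"(?!\w)"
            if re.search(pattern, section_text):
                hits.append(word)
        if hits:
            found[entity] = hits
    return found


# Made-up EducationSection text for illustration only.
EDUCATION_TEXT = "SSC, 2005, State Board\nXII (HSC), 2007, State Board"

education = section_value_extractor(EDUCATION_TEXT, EDUCATION_SPEC)
print(education)
```

Matching on fixed words is deliberately simple; a real resume would likely need more variants (for example “Matriculation”) added to the configuration.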

Segmentation

The sections mentioned in the above code snippets are blocks of text with labels such as SummarySection, EducationSection, etc. These are specified at the top of the config file.

- The “section_extractor” method parses the document line by line and looks for section headings.

- Sections are recognised by the keywords used in their headings. For SummarySection, for example, the keywords are “Summary”, “Aim”, “Objective”, etc.

- Once a match is found, the state “SummarySection” is set and subsequent lines are grouped under it until the next section is found.

- Once a new heading matches, the state for the next section starts, and so on.
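The line-wise state machine outlined above could be sketched as follows. The function signature, the dictionary of keywords, the “Education” keywords and the sample text are assumptions; only the SummarySection keywords and the overall behaviour are taken from the article. Lines that appear before any heading are collected under the unlabelled section “”.

```python
# Hypothetical keyword table; the EducationSection keywords are assumed.
SECTION_KEYWORDS = {
    "SummarySection": ["Summary", "Aim", "Objective"],
    "EducationSection": ["Education", "Qualifications"],
}


def section_extractor(text, section_keywords):
    """Split text into labelled sections based on heading keywords."""
    # Map each lower-cased heading keyword to its section label.
    lookup = {
        keyword.lower(): name
        for name, keywords in section_keywords.items()
        for keyword in keywords
    }
    current = ""  # unlabelled section for the initial lines
    sections = {current: []}
    for line in text.splitlines():
        stripped = line.strip()
        if not stripped:
            continue  # skip blank lines
        section = lookup.get(stripped.rstrip(":").lower())
        if section is not None:
            # A heading matched: switch state to the new section.
            current = section
            sections.setdefault(current, [])
        else:
            # Ordinary line: group it under the current section.
            sections[current].append(stripped)
    return {name: "\n".join(lines) for name, lines in sections.items()}


# Made-up resume text for illustration only.
RESUME_TEXT = (
    "Jane Doe\n"
    "jane@example.com\n"
    "Objective:\n"
    "Seeking a data science role.\n"
    "Education\n"
    "SSC, 2005\n"
)

sections = section_extractor(RESUME_TEXT, SECTION_KEYWORDS)
print(sections)
```

This sketch treats a line as a heading only when the whole line equals a keyword (ignoring case and a trailing colon), which is stricter than a substring search and avoids misreading body text that happens to contain the word “Summary”.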

Results

A sample resume is shown below:

Entities extracted are shown below:

The implementation of the parser, along with its config file and a sample resume, can be found on GitHub.

End note

This article demonstrates the unearthing of structured information from unstructured data such as a resume. As the implementation is shown for only one sample, it may not work for other formats. One would need to enhance and customize it to cater to other resume types. Apart from resumes, the framework of separating parsing from specification can be leveraged for other types of documents from different domains as well, by specifying domain-specific configuration files.
